HW 7¶

ISE 530 Optimization for Analytics Homework VII: Duality theory. Due 11:59 PM Wednesday November 04, 2020

Describe a dual-based algorithm (not the dual simplex) for solving a linear program with two constraints:

where it is given that \(\sum^n_{i=1} a_i \geq n\alpha\) (this ensures feasibility of the problem). The algorithm can be accomplished in several steps.

Step 1. Letting \(\lambda \geq 0\) and \(\mu\) (un-restricted in sign) be the two dual variables of the respective constraints of (2), form the dual program of the given linear program.

Step 2. The constraints of the dual program suggest that the optimizing \(\lambda\) can be uniquely determined from \(\mu\). Substituting this expression into the objective function of the dual reduces the dual program to a problem in the \(\mu\)-variable alone.

Step 3. The resulting univariate dual program (in \(\mu\)) can be solved by a simple sorting procedure.

Step 4. Use complementary slackness to recover a solution to the primal program.

Extend the procedure in the above exercise to case where the objective function is replaced by \(\sum^n_{i=1} c_i x^2_i\) with the coefficients \(c_i\) being all positive. Apply the resulting procedure to the problem:

Verify your solution graphically by considering the problem as a “nearest-point” problem.

Do Exercise 11.13 in the Cottle-Thapa text. Apply this exercise to the case where each function \(f_j(x_j) = c_jx_j + \frac{1}{2}x^2_j\) and describe a simple procedure for solving the problem. [This exercise shares some similarities with the preceding two problems.]

Do Exercises 11.31, 11.32, and 11.36 in the Cottle-Thapa text.

Determine the minimum objective value and minimizers of the convex program:

Show that the dual function:

is identically equal to zero but this value is not attained, for any \(\mu \geq 0\). This convex program has a positive gap in this generalized sense. Without worrying about the non-attainment issue of the dual function, what goes wrong with this example with reference to the general duality theory?

%load_ext autotime

%load_ext nb_black

%matplotlib inline

import matplotlib.pyplot as plt

from mpl_toolkits.mplot3d import Axes3D

plt.rcParams["figure.dpi"] = 300

plt.rcParams["figure.figsize"] = (16, 12)

import operator

import pandas as pd

import numpy as np

import cvxpy as cp

import scipy as sp

from sympy import Matrix

from scipy import optimize

Describe a dual-based algorithm (not the dual simplex) for solving a linear program with two constraints:

where it is given that \(\sum^n_{i=1} a_i \geq n\alpha\) (this ensures feasibility of the problem). The algorithm can be accomplished in several steps.

Step 1. Letting \(\lambda \geq 0\) and \(\mu\) (un-restricted in sign) be the two dual variables of the respective constraints of (2), form the dual program of the given linear program.

Step 2. The constraints of the dual program suggest that the optimizing \(\lambda\) can be uniquely determined from \(\mu\). Substituting this expression into the objective function of the dual reduces the dual program to a problem in the \(\mu\)-variable alone.

Step 3. The resulting univariate dual program (in \(\mu\)) can be solved by a simple sorting procedure.

Step 4. Use complementary slackness to recover a solution to the primal program.

Extend the procedure in the above exercise to case where the objective function is replaced by \(\sum^n_{i=1} c_i x^2_i\) with the coefficients \(c_i\) being all positive. Apply the resulting procedure to the problem:

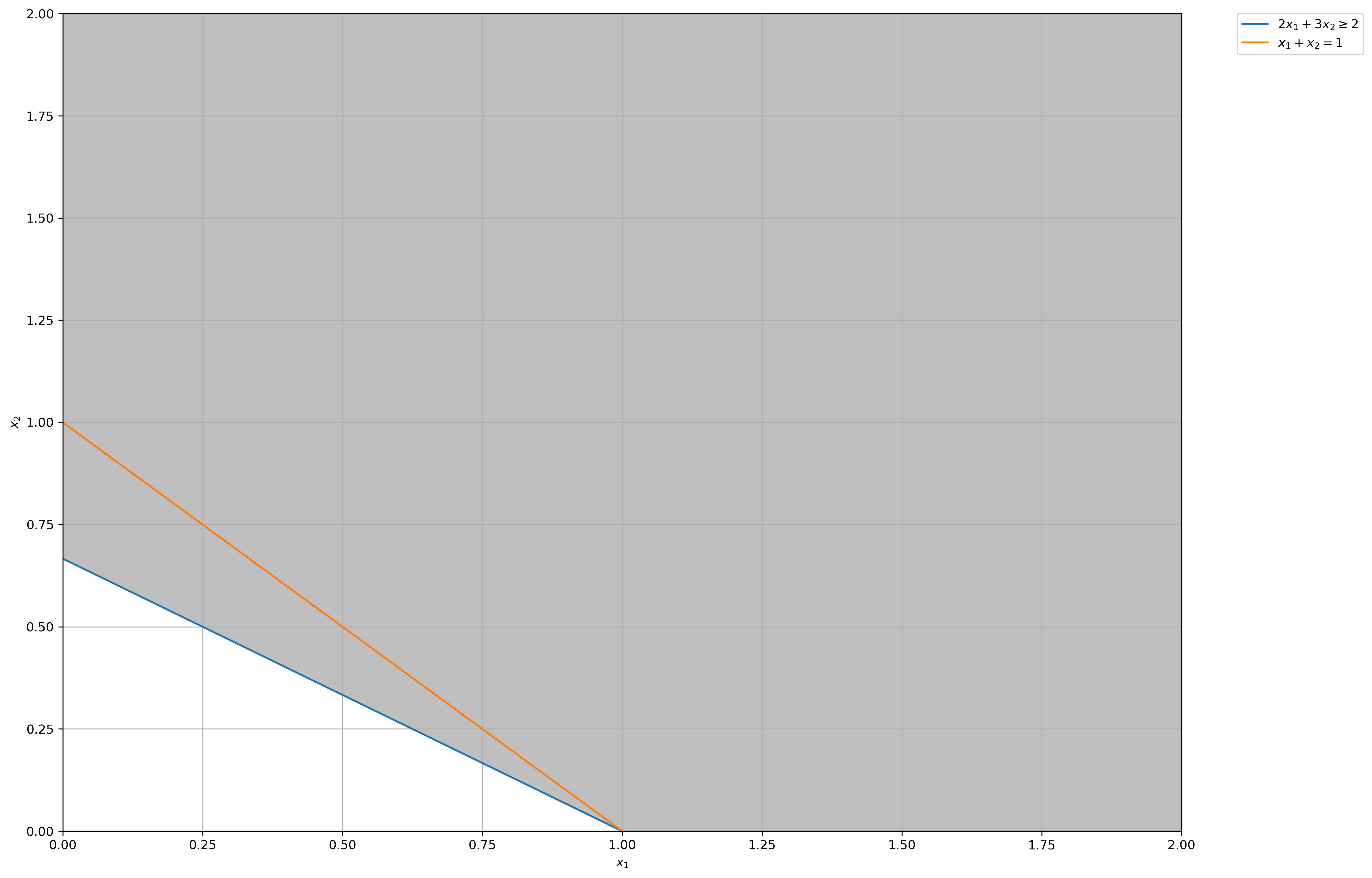

Verify your solution graphically by considering the problem as a “nearest-point” problem.

Step 1 - Dual Program:

From constraint \(3\lambda + \mu = 1\),

Step 2 - Substituting \(\lambda\) into objective function, dual program becomes parameterized by \(\mu\) alone:

Step 3 - Set objective value to 1:

Step 4 - Complementary Slackness:

Lagrangian:

# Construct lines

x_1 = np.linspace(0, 2, 2000) # x_1 >= 0

x_2_1 = lambda x_1: (2 - 2 * x_1) / 3 # constraint 1: 2𝑥1 + 3𝑥2 ≥ 2

x_2_2 = lambda x_1: 1 - x_1 # constraint 2: 𝑥1 + 𝑥2 = 1

# Make plot

plt.plot(x_1, x_2_1(x_1), label=r"$2x_1 + 3x_2 \geq 2$")

plt.plot(x_1, x_2_2(x_1), label=r"$x_1 + x_2 = 1$")

plt.xlim((0, 2))

plt.ylim((0, 2))

plt.xlabel(r"$x_1$")

plt.ylabel(r"$x_2$")

# Fill feasible region

x_2_lb = np.maximum(x_2_1(x_1), np.zeros(len(x_1)))

x_2_ub = np.array([2] * len(x_1))

plt.fill_between(x_1, x_2_lb, x_2_ub, where=x_2_ub > x_2_lb, color="grey", alpha=0.5)

plt.grid(True)

plt.legend(bbox_to_anchor=(1.05, 1), loc=2, borderaxespad=0.0)

plt.show()

time: 1.52 s

The minimum squared euclidean norm satisfying the constraint of \(x_1 + x_2 = 1\) above (perpendicular to the \(x_1 + x_2 = 1\) hyperplane) is \(x^*_1 = 0.5, x^*_2 = 0.5\), hence the solution we found via complementary slackness is verified optimal.

11.13¶

A function \(f: \mathbb{R}^n \rightarrow \mathbb{R}\) is said to be separable if it can be written as the sum of functions of a single real variable, that is

(a) Let \(f\) be a differentiable separable function and consider the nonlinear program

where \(\sigma\) is a positive scalar. Write the first-order conditions for a local minimizer \(\bar{x}\) in this nonlinear program.

Lagrangian:

First Order conditions for a local minimizer \(\bar{x}\) in this nonlinear program:

\(\mu\) is free.

(b) Recall that if \(x \in \mathbb{R}^n\), the set \(\{j : x_j \not= 0\}\) is called the support of \(x\), denoted by \(\sup(x)\). Show that every feasible solution in part (a) has a nonempty support.

Since we know that \(\sigma\) is a positive scalar, \(x_j \geq 0\), and a feasible solution to part (a) must satisfy the constraint \(\sum^{n}_{j=1} x_j = \sigma\), one of the \(x_j\)s MUST be positive, hence any feasible solution will have a nonempty support \(\blacksquare\).

(c) Show that there is a real number \(\kappa\) such that if \(x^∗\) is a local minimizer of the nonlinear program in part (a), then for all \(j \in \sup(x^∗)\)

As shown in the first order conditions, the partial derivative (simply the derivative since the function is of just one variable), must be 0. Hence \(\kappa = 0\).

Do Exercise 11.13 in the Cottle-Thapa text. Apply this exercise to the case where each function \(f_j(x_j) = c_jx_j + \frac{1}{2}x^2_j\) and describe a simple procedure for solving the problem. [This exercise shares some similarities with the preceding two problems.]

Since the gradients of each function \(f_j(x_j)\), \(f^\prime_j(x_j) = 0\), we can just set each \(x_j = -c_j\). Since coefficient of \(x^2_j\) in \(f_j(x_j)\) is \(> 0\), the hessian of this univariate function is positive definite, hence function is strictly convex, and since our function for the optimization problem is a sum composition of striclty convex functions, it is also strictly convex, hence our solution will be the global minimizer.

11.31¶

Find all extremal values of \(z\), and the corresponding values of \(x_1\) and \(x_2\), using the method of Lagrange multipliers in the following two instances.

(a)

Finding infimum of Lagrangian:

Case I: \(\lambda = \frac{1}{2} \rightarrow x_1 = -x_2\)

Case II: \(\lambda = -\frac{1}{2} \rightarrow x_1 = x_2\)

(b)

Finding infimum of Lagrangian:

Primal Feasibility:

Case I: \(\lambda = \sqrt{\frac{5}{52}} \rightarrow x^* = -\sqrt{\frac{52}{5}}\begin{bmatrix} \frac{1}{2} \\ 1 \\ \end{bmatrix}\)

Case II: \(\lambda = -\sqrt{\frac{5}{52}} \rightarrow x^* = \sqrt{\frac{52}{5}}\begin{bmatrix} \frac{1}{2} \\ 1 \\ \end{bmatrix}\)

11.32¶

Consider the following univariate optimization problem

(a) Form the Lagrangian.

Lagrangian function:

(b) Find the dual of the problem.

Lagrangian Dual Function:

Dual Problem:

Finding infimum of Lagrangian:

Lagrangian Dual Function:

Substituting into Dual Problem:

Supremum of Dual function:

Case I: \(\lambda^* = -6\)

Rejected, since \(\lambda \geq 0\) constraint.

Case II: \(\lambda^* = 4\)

Optimal Dual Objective value:

(c) Verify the Weak Duality Theorem for this problem.

Primal Objective value:

With \(x^* = \frac{5\lambda}{(2 + 2\lambda)}\) and \(\lambda^* = 4\),

Since solution to primal (4) is \(\geq\) solution to dual (4), weak duality holds.

(d) Does Strong Duality hold, or is there a duality gap?

Since the duality gap is 0 as optimal objective value for both primal and dual problem is the same, Strong duality holds.

11.36¶

Use the Lagrangian dual process to show that

is an empty set.

Suppose the following maximization problem

Lagrangian:

First order conditions:

Primal Feasibility:

Hence,

This makes the optimal objective value to the maximization problem: \(3\Big(\frac{2}{3}\Big)^2 = \frac{4}{3}\). Hence, since maximium value is not \(> 1\), the intersection of the 2 constraints is an empty set. \(\blacksquare\)

Determine the minimum objective value and minimizers of the convex program:

Show that the dual function:

is identically equal to zero but this value is not attained, for any \(\mu \geq 0\). This convex program has a positive gap in this generalized sense. Without worrying about the non-attainment issue of the dual function, what goes wrong with this example with reference to the general duality theory?

Lagrangian:

Lagrangian Dual Function:

Finding infimum of Lagrangian:

Hence, since \(x\) can take on any value and \(y=0\), the dual function reduces to:

If we choose \(x \rightarrow -\infty\), \(d(\mu) \rightarrow -\infty\) and dual function is unbounded below.

Under Weak Duality Thm, we know that the optimal dual value is a lower bound on the optimal primal value, but since our optimal dual value here is \(-\infty\), duality theory is useless in helping us find the optimal primal value to the problem.