HW 2¶

ISE-530 Homework II: Chapters III and IV of Cottle-Thapa. Due 11:59 PM Wednesday September 23, 2020

Exercises 3.3, 3.4, and 3.5.

Exercises 4.1(a), 4.11, 4.12, 4.14 and 4.18.

Formulate a linear program for the planar absolute-value regression problem given the 5 points: (-1,-2), (0,0), (1,1), (2,3), and (3,0). Write an AMPL code for the resulting linear program and solve it by a solver.

Use the same 5 points and same objective as above to determine a planar isotone (i.e, nondecreasing) curve that best fits the given points. Do the same with a convex curve instead. Solve both linear programs using AMPL.

%load_ext nb_black

%matplotlib inline

import matplotlib.pyplot as plt

plt.rcParams["figure.dpi"] = 300

import numpy as np

import cvxpy as cp

from collections import namedtuple

Chapter 3¶

3.3¶

Consider the system of equations

(a) Write the matrix \(A\) and the vector \(b\) such that the given system of equations is the verbose form of \(Ax = b\).

Define:

(b) Identify the matrix \(B = [A_{\bullet3} A_{\bullet2} A_{\bullet4}]\) and verify that it is a basis in \(A\).

After performing elementary row operations, we see that matrix \(B\)’s column space contain 3 linearly independent column vectors which span \(\mathbb{R}^3\), making it a basis for \(A\).

(c) Compute the basic solution associated with the matrix \(B\) (in part(b) above) and verify that it is a nonnegative vector.

Define:

(Personal Note: If A was the detached coefficient matrix representing constraints, The corresponding basic solution with matrix \(B\) is a basic solution when we set \(x_N = 0\) and solve for \(x_B\), and is a basic feasible solution iff \(x_B = B^{-1}b \geq 0\).)

Hence, basic solution:

\(\therefore\) basic solution associated with matrix \(B\) is a non-negative vector.

(d) What is the index set \(N\) of the nonbasic variables in this case? [Hint: Although it is not absolutely necessary to do so, you may find it useful to compute the inverse of B in this exercise.]

\(N = \{1, 5\}\)

3.4¶

Consider the LP

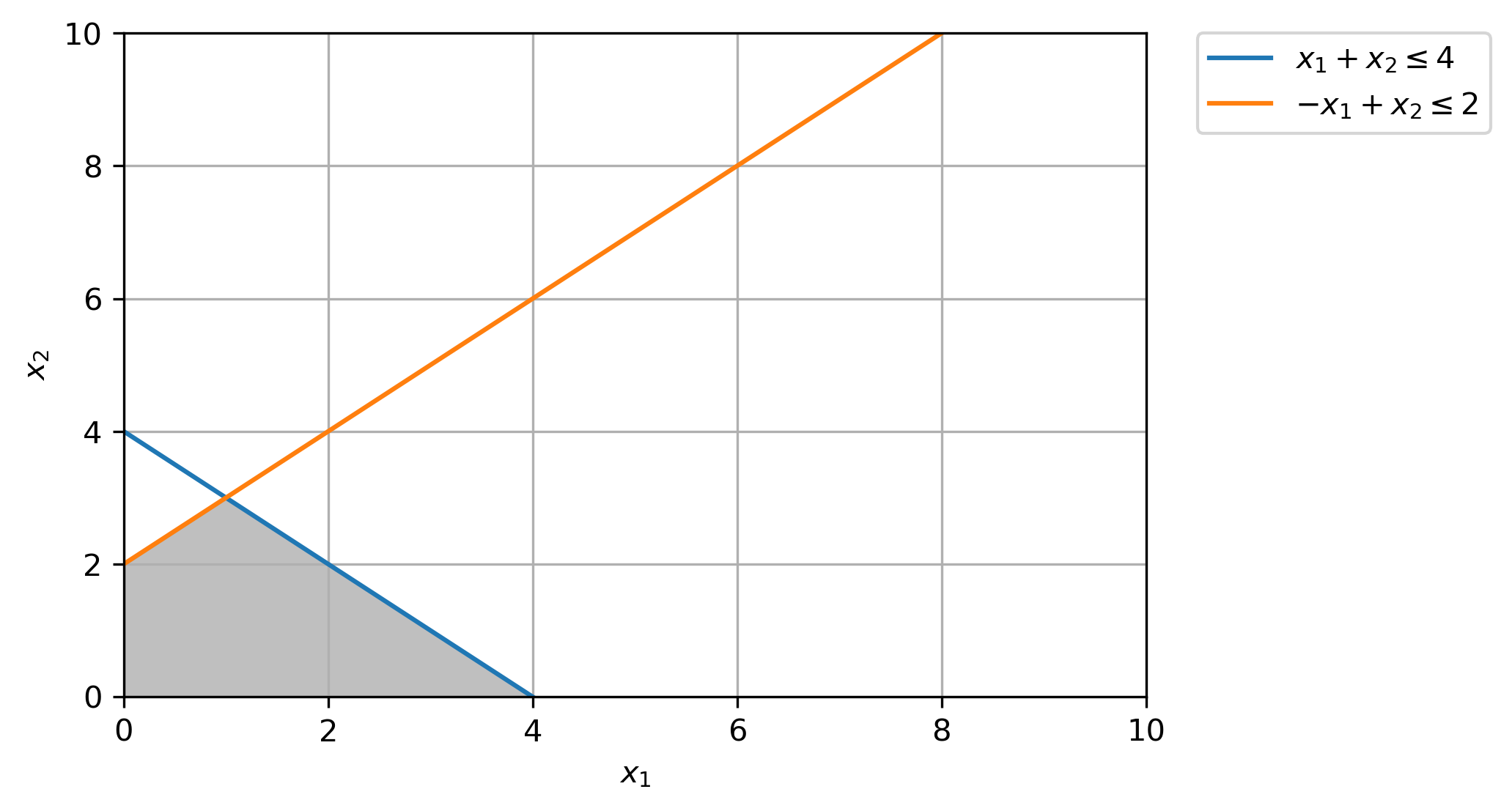

(a) Plot the feasible region.

# Create one vector optimization variable.

x = cp.Variable((2,), integer=False)

# Create constraints.

constraints = [

np.array([[1, 1], [-1, 1]]) @ x <= np.array([4, 2]),

x >= 0,

]

# Form objective.

obj = cp.Maximize(np.array([1, 2]) @ x)

# Form and solve problem.

prob = cp.Problem(obj, constraints)

prob.solve()

print("Linear Programming Solution")

print("=" * 30)

print(f"Status: {prob.status}")

print(f"The optimal value is: {np.round(prob.value, 2)}")

print(f"The optimal solution is: x = {[np.round(x_i, 2) for x_i in x.value]}")

Linear Programming Solution

==============================

Status: optimal

The optimal value is: 7.0

The optimal solution is: x = [1.0, 3.0]

# Construct lines

x_1 = np.linspace(0, 20, 2000) # x_1 >= 0

x_2_1 = lambda x_1: -x_1 + 4 # constraint 1: 𝑥1 + 𝑥2 ≤ 4

x_2_2 = lambda x_1: x_1 + 2 # constraint 2: −𝑥1 + 𝑥2 ≤ 2

# Make plot

plt.plot(x_1, x_2_1(x_1), label=r"$x_1 + x_2 \leq 4$")

plt.plot(x_1, x_2_2(x_1), label=r"$-x_1 + x_2 \leq 2$")

plt.xlim((0, 10))

plt.ylim((0, 10))

plt.xlabel(r"$x_1$")

plt.ylabel(r"$x_2$")

# Fill feasible region

y = np.minimum(x_2_1(x_1), x_2_2(x_1))

x = np.zeros(len(x_1))

plt.fill_between(x_1, x, y, where=y > x, color="grey", alpha=0.5)

plt.grid(True)

plt.legend(bbox_to_anchor=(1.05, 1), loc=2, borderaxespad=0.0)

plt.show()

(b) Identify all the basic solutions and specify which are feasible and which are infeasible.

For a linear inequality system (including equalities) in \(n\) variables, a feasible solution is basic if there are \(n\) linearly independent active constraints at the given solution.

Basic Solutions:

\((x_1=0, x_2=0)\), 2 active constraints, \(x_1=0\geq0\) & \(x_2=0\geq0\) [Feasible]

\((x_1=0, x_2=2)\), 2 active constraints, \(x_1=0\geq0\) & \(−x_1+x_2=0+2=2\leq 2\) [Feasible]

\((x_1=1, x_2=3)\), 2 active constraints, \(x_1+x_2=1+3=4\leq 4\) & \(−x_1+x_2=-1+3=2\leq 2\) [Feasible]

\((x_1=4, x_2=0)\), 2 active constraints, \(x_1+x_2=4+0=4\leq 4\) & \(x_2\geq0\) [Feasible]

(c) Solve the problem using the Simplex Algorithm.

Standard Form:

From the Canonical Tableau:

\(\therefore\) Since the reduced costs are all \(\leq 0\) for a minimization problem, our simplex method is complete, with basic variables \(x_1 = 1, x_2 = 3, s_1 = 0, s_2 = 0\) and our maximum objective value is \(7.0\).

3.5¶

Consider the LP

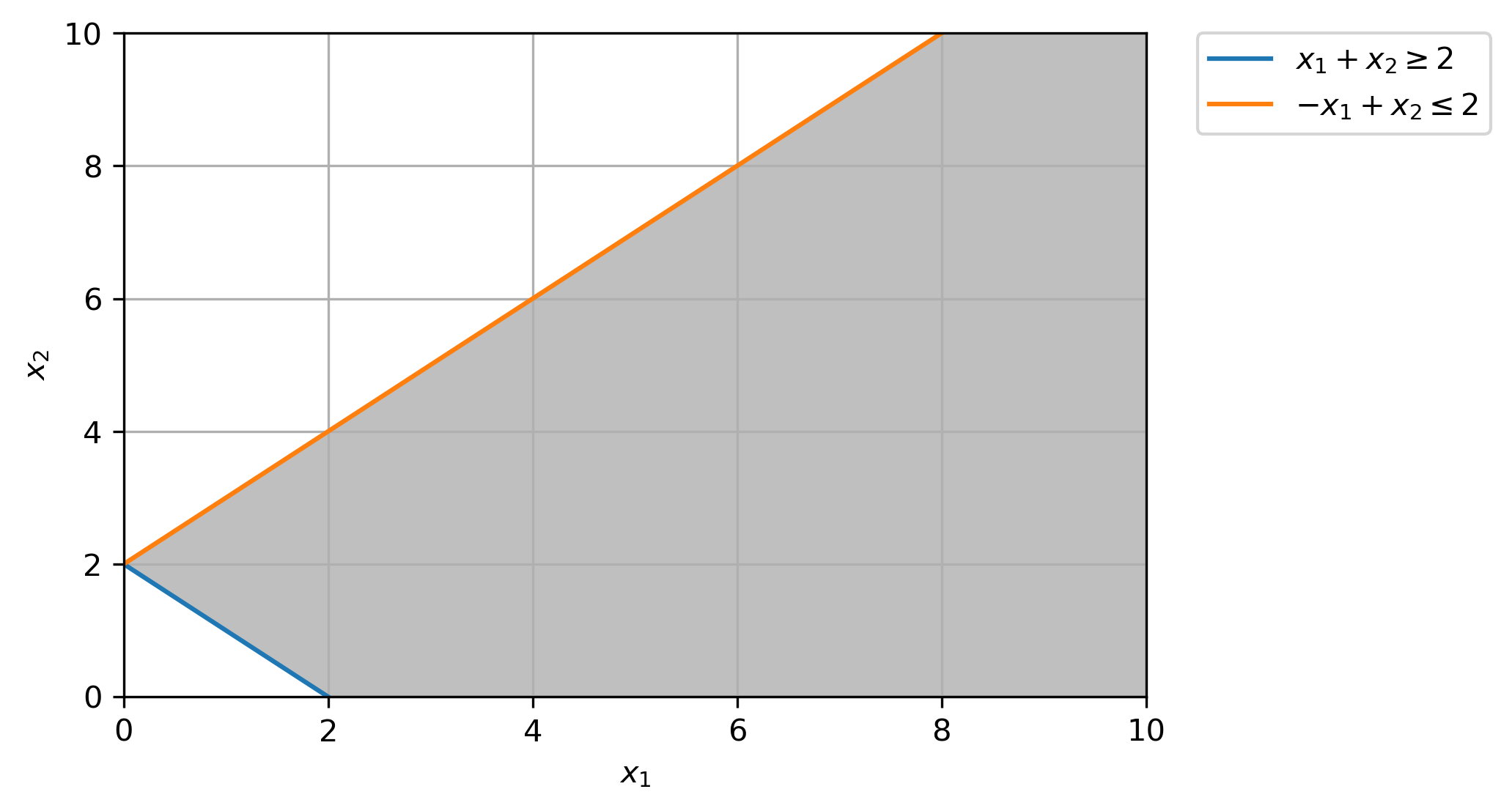

(a) Plot the feasible region.

# Construct lines

x_1 = np.linspace(0, 20, 2000) # x_1 >= 0

x_2_1 = lambda x_1: -x_1 + 2 # constraint 1: 𝑥1 + 𝑥2 ≥ 2

x_2_2 = lambda x_1: x_1 + 2 # constraint 2: −𝑥1 + 𝑥2 ≤ 2

# Make plot

plt.plot(x_1, x_2_1(x_1), label=r"$x_1 + x_2 \geq 2$")

plt.plot(x_1, x_2_2(x_1), label=r"$-x_1 + x_2 \leq 2$")

plt.xlim((0, 10))

plt.ylim((0, 10))

plt.xlabel(r"$x_1$")

plt.ylabel(r"$x_2$")

# Fill feasible region

y = x_2_2(x_1)

x = np.maximum(x_2_1(x_1), np.zeros(len(x_1)))

plt.fill_between(x_1, x, y, where=y > x, color="grey", alpha=0.5)

plt.grid(True)

plt.legend(bbox_to_anchor=(1.05, 1), loc=2, borderaxespad=0.0)

plt.show()

(b) Identify all the basic solutions and specify which are feasible and which are infeasible.

For a linear inequality system (including equalities) in \(n\) variables, a feasible solution is basic if there are \(n\) linearly independent active constraints at the given solution.

Basic Solutions:

\((x_1=0, x_2=0)\), 2 active constraints, \(x_1=0\geq0\) & \(x_2=0\geq0\) [Infeasible]

\((x_1=2, x_2=0)\), 2 active constraints, \(x_1 + x_2 =2+0=2\geq 2\) & \(x_2=0\geq0\) [Feasible]

(c) Solve the LP by the Simplex Algorithm and show that the algorithm stops with the objective function being unbounded over the feasible region.

Standard Form:

From Phase I Tableau:

This tableau reveals that w, the value of the artificial objective function, has been reduced to zero. Now, in the computation, the 1st row and the \(y_1\) column can be ignored (or deleted from the tableau). We can also pick out the basic feasible solution \((x_1, x_2, s_1, s_2) = (2, 0, 0, 4)\) of the original system that has been found by the Phase I Procedure.

Phase II: Normal Simplex Method

\(\therefore\) Since, we will choose the entering variable as \(s_1\), but the column of \(s_1\) has no positive entries, the linear program is unbounded.

(d) Modify the first inequality constraint to be x1 + x2 ≤ 2 and show that there are infinite optimal solutions.

Standard Form:

Canonical Tableau:

\(\therefore\) Since the reduced costs are all \(\leq 0\) for a minimization problem, our simplex method is complete, with basic variables \(x_1 = 2, s_2 = 4\) and non-basic variables \(x_2=0, s_1=0\) and our maximum objective value is \(2.0\).

# Create one vector optimization variable.

x = cp.Variable((2,), integer=False)

# Create constraints.

constraints = [

np.array([[1, 1], [-1, 1]]) @ x <= np.array([2, 2]),

x >= 0,

]

# Form objective.

obj = cp.Minimize(np.array([-1, -1]) @ x)

# Form and solve problem.

prob = cp.Problem(obj, constraints)

prob.solve()

print("Linear Programming Solution")

print("=" * 30)

print(f"Status: {prob.status}")

print(f"The optimal value is: {np.round(prob.value, 2)}")

print(f"The optimal solution is: x = {[np.round(x_i, 2) for x_i in x.value]}")

Linear Programming Solution

==============================

Status: optimal

The optimal value is: -2.0

The optimal solution is: x = [1.0, 1.0]

Chapter 4¶

4.1(a)¶

Use Phase I and Phase II of the Simplex Algorithm to solve the linear program

Do the computations “by hand.”

From Phase I Tableau:

This tableau reveals that w, the value of the artificial objective function, has been reduced to zero. Now, in the computation, the 1st row and the \(y_1, y_2\) columns can be ignored (or deleted from the tableau). We can also pick out the basic feasible solution \((x_1, x_2, x_3) = (0, \frac{25}{11}, \frac{2}{11})\) of the original system that has been found by the Phase I Procedure.

Phase II: Normal Simplex Tableau

\(\therefore\) Since the reduced costs are all \(\leq 0\) for a minimization problem, our simplex method is complete, with basic variables \(x_2 = \frac{25}{11}, x_3 = \frac{2}{11}\), non-basic variables \(x_1 = 0\) and our minimum objective value is \(-\frac{56}{11}\).

# Create one vector optimization variable.

x = cp.Variable((3,), integer=False)

# Create constraints.

constraints = [

np.array([[1, 2, -3], [2, 3, 1]]) @ x == np.array([4, 7]),

x >= 0,

]

# Form objective.

obj = cp.Minimize(np.array([3, -2, -3]) @ x)

# Form and solve problem.

prob = cp.Problem(obj, constraints)

prob.solve()

print("Linear Programming Solution")

print("=" * 30)

print(f"Status: {prob.status}")

print(f"The optimal value is: {np.round(prob.value, 2)}")

print(f"The optimal solution is: x = {[np.round(x_i, 2) for x_i in x.value]}")

Linear Programming Solution

==============================

Status: optimal

The optimal value is: -5.09

The optimal solution is: x = [0.0, 2.27, 0.18]

4.11¶

Solve the following linear program by the Simplex Method

Standard Form:

From the Canonical Tableau:

\(\therefore\) Since the reduced costs are all \(\leq 0\) for a minimization problem, our simplex method is complete, with basic variables \(x_2 = 6, x_3 = 2, s_1 = 4\), non-basic variables \(x_1 = 0, x_4 = 0, s_2 = 0, s_3 = 0\) and our minimum objective value is \(-44.0\).

# Create one vector optimization variable.

x = cp.Variable((4,), integer=False)

# Create constraints.

constraints = [

np.array([[2, 1, 1, -2], [0, 2, -2, 1], [4, 1, 2, 3]]) @ x <= np.array([12, 8, 10]),

x >= 0,

]

# Form objective.

obj = cp.Minimize(np.array([-4, -8, 2, 4]) @ x)

# Form and solve problem.

prob = cp.Problem(obj, constraints)

prob.solve()

print("Linear Programming Solution")

print("=" * 30)

print(f"Status: {prob.status}")

print(f"The optimal value is: {np.round(prob.value, 2)}")

print(f"The optimal solution is: x = {[np.round(x_i, 2) for x_i in x.value]}")

Linear Programming Solution

==============================

Status: optimal

The optimal value is: -44.0

The optimal solution is: x = [-0.0, 6.0, 2.0, -0.0]

4.12¶

Solve the following linear program by the Simplex Method

Standard Form with Slack and Artificial Variables:

From the Phase I Tableau:

This tableau reveals that w, the value of the artificial objective function, has been reduced to zero. Now, in the computation, the 1st row and the \(y_1, y_2, y_3\) columns can be ignored (or deleted from the tableau). We can also pick out the basic feasible solution \((x_1, x_2, x_3, s_1, s_2, s_3) = (0, 0, 8, 0, 4, 0)\) of the original system that has been found by the Phase I Procedure.

Phase II: Normal Simplex Tableau

\(\therefore\) Since the reduced costs are all \(\leq 0\) for a minimization problem, our simplex method is complete, with basic variables \(x_2 = 6, x_1 = 8, s_2 = 20\), non-basic variables \(x_3 = 0, s_1 = 0, s_3 = 0\) and our minimum objective value is \(32.0\).

# Create one vector optimization variable.

x = cp.Variable((3,), integer=False)

# Create constraints.

constraints = [

np.array([[2, -4, -1], [-1, -4, -2], [-2, 0, -2]]) @ x <= np.array([-8, -12, -16]),

x >= 0,

]

# Form objective.

obj = cp.Minimize(np.array([1, 4, 6]) @ x)

# Form and solve problem.

prob = cp.Problem(obj, constraints)

prob.solve()

print("Linear Programming Solution")

print("=" * 30)

print(f"Status: {prob.status}")

print(f"The optimal value is: {np.round(prob.value, 2)}")

print(f"The optimal solution is: x = {[np.round(x_i, 2) for x_i in x.value]}")

Linear Programming Solution

==============================

Status: optimal

The optimal value is: 32.0

The optimal solution is: x = [8.0, 6.0, 0.0]

4.14¶

Solve the following linear program by the Revised Simplex Method

Standard Form:

Phase I Tableau:

This tableau reveals that w, the value of the artificial objective function, has been reduced to zero. Now, in the computation, the 1st row and the \(y_1, y_2\) columns can be ignored (or deleted from the tableau). We can also pick out the basic feasible solution \((x_1, x_2, x_3, x_4, s_1) = (10/3, 20/3, 0, 0, 0, 0)\) of the original system that has been found by the Phase I Procedure.

Revised Simplex Method:

Test for Optimality

Find Pricing Vector \(y = c_B B^{-1}\)

Compute \(z_j = c_B B^{-1}N\)

Find non-basic index \(j\) such that \(z_N - c_N = \text{max}_{j\in N}{\{z_j - c_j\}}\). If \(z_j - c_j \leq 0\), stop, current BFS is optimal

Min. Ratio Test

Find \(\bar{A}_{\bullet s} = B^{-1}A_{\bullet s}\)

If \(\bar{a}_{is} \leq 0\) for all \(i\), stop. The objective is unbounded below. Otherwise, find an index \(r\) such that \(\bar{a}_{is} > 0\) and \(\frac{\bar{b}_r}{\bar{a}_{rs}} = \text{min}\Big\{\frac{\bar{b}_i}{\bar{a}_{is}}: \bar{a}_{is} > 0 \Big\}\)

Update new basis, \(A_{\bullet {j_r}}\) replaces \(B_{\bullet {j_r}}\)

Iteration 1:

1A.

1B.

1C.

2A.

2B.

When all reduced cost coefficients are \(\leq 0\), BFS is optimal, hence solution is \((x_1, x_2, x_3, x_4) = (0, 5, 0, 5)\)

# Create one vector optimization variable.

x = cp.Variable((4,), integer=False)

# Create constraints.

constraints = [

np.array([[1, 1, -2, 1]]) @ x == np.array([10]),

np.array([[4, 1, 2, 3]]) @ x >= np.array([20]),

x >= 0,

]

# Form objective.

obj = cp.Minimize(np.array([3, 1, 4, 2]) @ x)

# Form and solve problem.

prob = cp.Problem(obj, constraints)

prob.solve()

print("Linear Programming Solution")

print("=" * 30)

print(f"Status: {prob.status}")

print(f"The optimal value is: {np.round(prob.value, 2)}")

print(f"The optimal solution is: x = {[np.round(x_i, 2) for x_i in x.value]}")

Linear Programming Solution

==============================

Status: optimal

The optimal value is: 15.0

The optimal solution is: x = [0.0, 5.0, 0.0, 5.0]

4.18¶

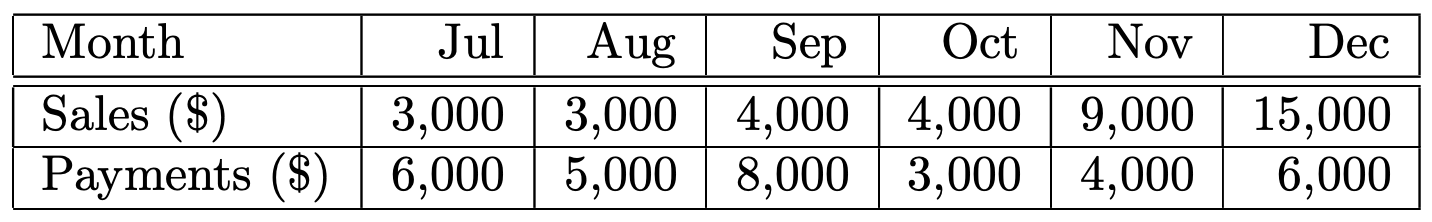

CT Gift Shop is profitable but has a cash flow problem as of the end of June. December is the busiest month, and the shop expects to make a decent profit by the end of the year. As of the end of June, the gift shop has 2, 000 in cash in the bank. At the start of each month, rent and other bills are due, and the shop has to make the payments. By the end of the month the shop will receive revenues from its sales. The projected revenues and payments are as follows:

CT Gift Shop has an excellent credit history and can take out loans to help cover the shortfalls. On July 1, the gift shop can take out a 6-month loan with a 10% interest rate for the period that is to be paid back at the end of December. The gift shop can also take out a 1-month loan at the start of each month at an interest rate of 2% per month. Formulate CT Gift Shop’s cashflow problem as an LP so to minimize the amount of interest paid. Solve the LP by the Simplex Method.

Linear Program:

\(x_1, \cdots x_6\) represent the 1-month loan amounts and \(x_7\) represents the 6-month loan amount.

Standard Form:

Standard Form with artificial variables:

Phase I Tableau:

Extra¶

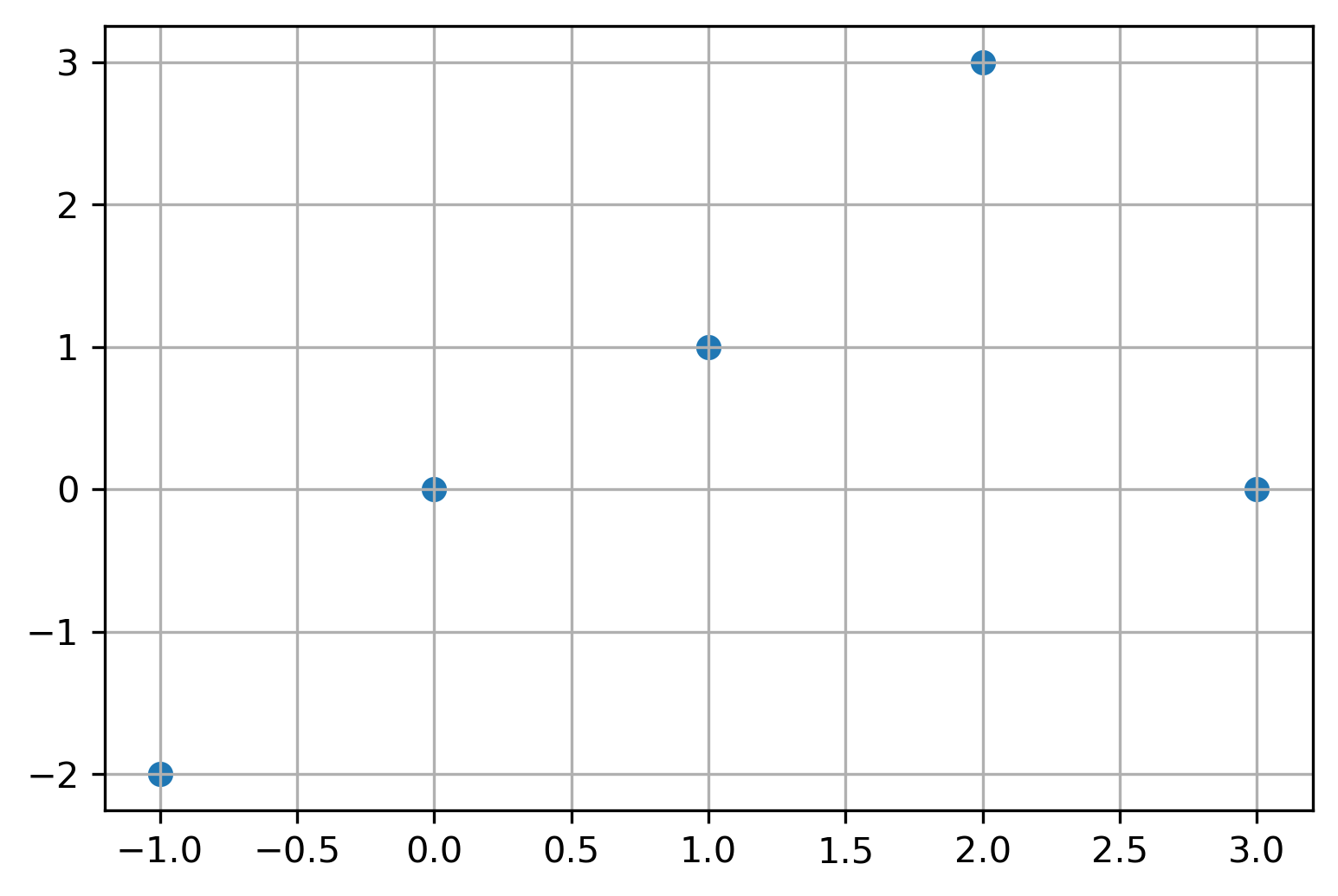

Formulate a linear program for the planar absolute-value regression problem given the 5 points: (-1,-2), (0,0), (1,1), (2,3), and (3,0). Write an AMPL code for the resulting linear program and solve it by a solver.

points = np.array(

sorted([[-1, -2], [0, 0], [1, 1], [2, 3], [3, 0]], key=lambda x: x[0])

)

plt.scatter(*list(zip(*points)))

plt.grid(True)

plt.show()

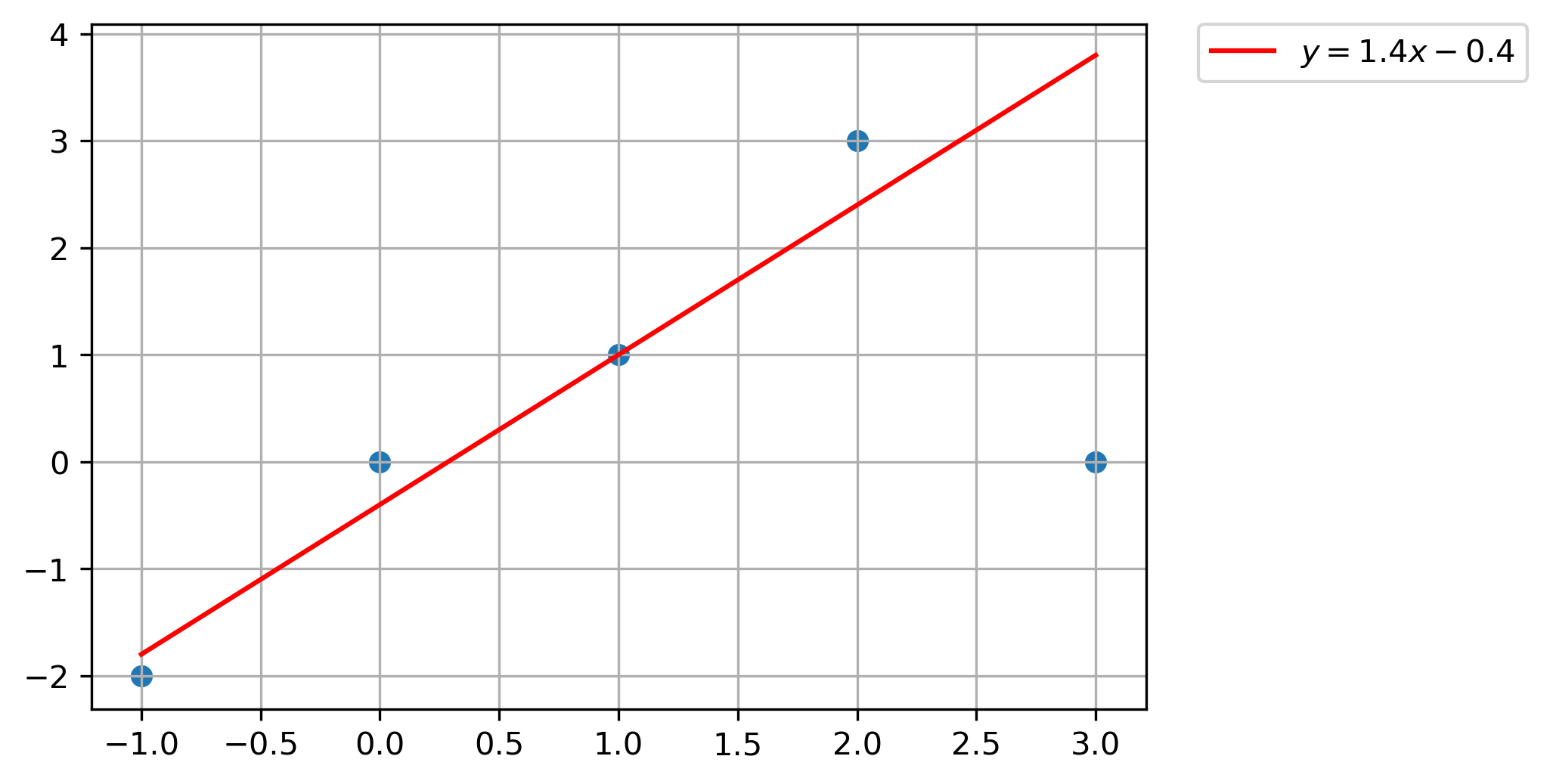

# Create optimization variables

t = cp.Variable((len(points), 2), integer=False) # Absolute Deviations

w = cp.Variable((1,), integer=False) # Weights

α = cp.Variable((1,), integer=False) # Bias

# Create constraints.

constraints = [

t @ np.array([1, -1]) + (w * points[:, 0] + α) == points[:, 1],

t >= 0,

]

# Form objective.

obj = cp.Minimize(np.ones((1, len(points))) @ (t @ np.array([[1], [1]])) / len(points))

# Form and solve problem.

prob = cp.Problem(obj, constraints)

prob.solve()

print("Linear Programming Solution")

print("=" * 30)

print(f"Status: {prob.status}")

print(f"The optimal value is: {np.round(prob.value, 2)}")

print(f"Residuals: t = {np.max(np.round(t.value, 2), axis=1)}")

print(f"y = {np.round(w.value, 2)[0]}x {np.round(α.value, 2)[0]}")

Linear Programming Solution

==============================

Status: optimal

The optimal value is: 1.0

Residuals: t = [0.19 0.4 0. 0.6 3.81]

y = 1.4x -0.4

plt.plot(

np.linspace(-1, 3, 2000),

np.round(w.value, 2)[0] * np.linspace(-1, 3, 2000) + np.round(α.value, 2)[0],

c="red",

label=r"$y = 1.4x - 0.4$",

)

plt.scatter(*list(zip(*points)))

plt.grid(True)

plt.legend(bbox_to_anchor=(1.05, 1), loc=2, borderaxespad=0.0)

plt.show()

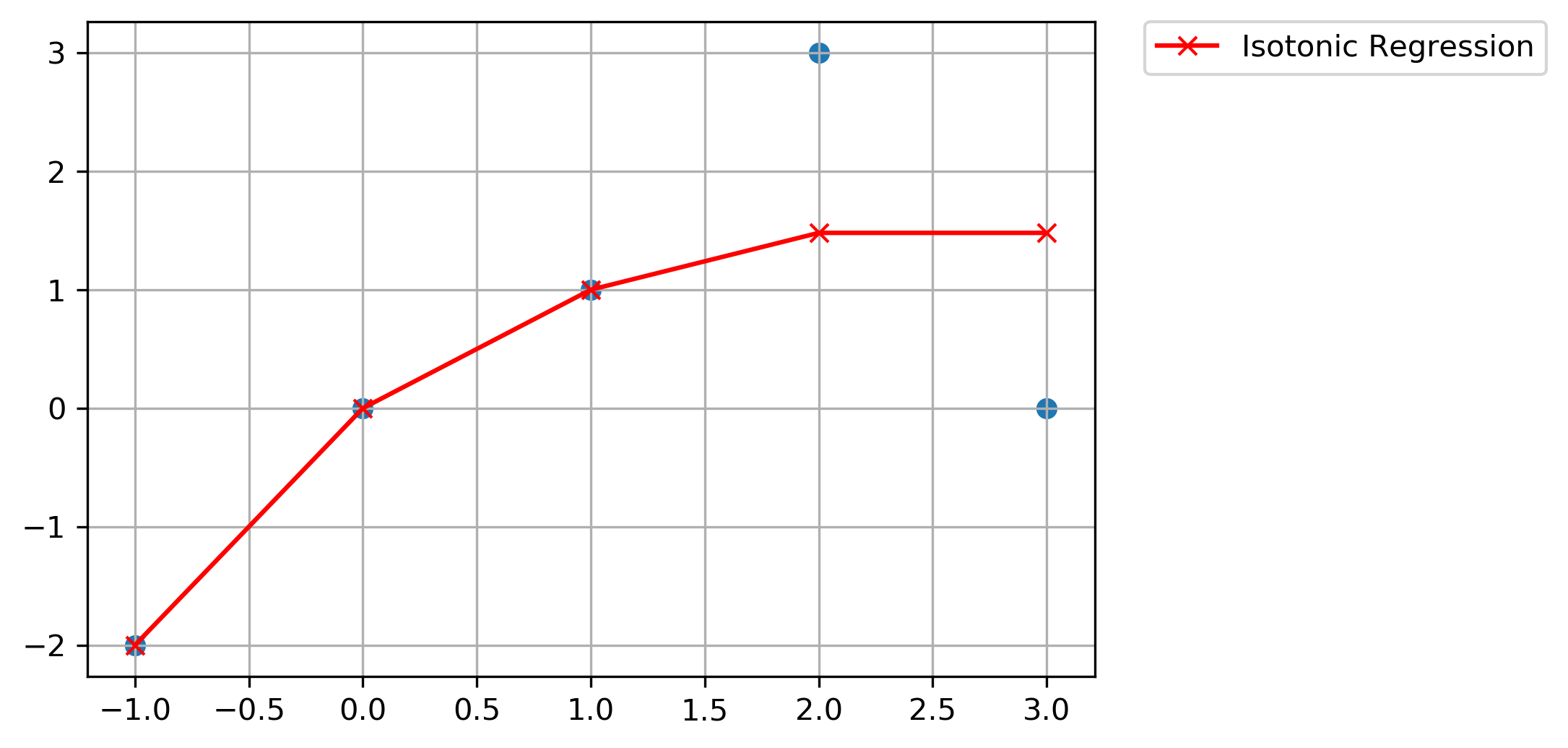

Use the same 5 points and same objective as above to determine a planar isotone (i.e, nondecreasing) curve that best fits the given points. Do the same with a convex curve instead. Solve both linear programs using AMPL.

Isotonic Regression QP (Minimize Squared errors):

Isotonic Regression (Minimize Absolute errors):

LP Formulation:

# Create optimization variables

t = cp.Variable((len(points), 2), integer=False) # Absolute Deviations

y = cp.Variable((len(points),), integer=False) # y predictions

# Create constraints.

constraints = [y_i <= y_j for y_i, y_j in zip(y, y[1:])] + [

t @ np.array([1, -1]) + y == points[:, 1],

t >= 0,

]

# Form objective.

obj = cp.Minimize(np.ones((1, len(points))) @ (t @ np.array([[1], [1]])))

# Form and solve problem.

prob = cp.Problem(obj, constraints)

prob.solve()

print("Linear Programming Solution")

print("=" * 30)

print(f"Status: {prob.status}")

print(f"The optimal value is: {np.round(prob.value, 2)}")

print(f"Residuals: t = {np.max(np.round(t.value, 2), axis=1)}")

print(f"y = {np.round(y.value, 2)}")

Linear Programming Solution

==============================

Status: optimal

The optimal value is: 3.0

Residuals: t = [-0. 0. -0. 1.52 1.48]

y = [-2. -0. 1. 1.48 1.48]

plt.plot(

points[:, 0], np.round(y.value, 2), "-x", c="red", label=r"Isotonic Regression",

)

plt.scatter(*list(zip(*points)))

plt.grid(True)

plt.legend(bbox_to_anchor=(1.05, 1), loc=2, borderaxespad=0.0)

plt.show()

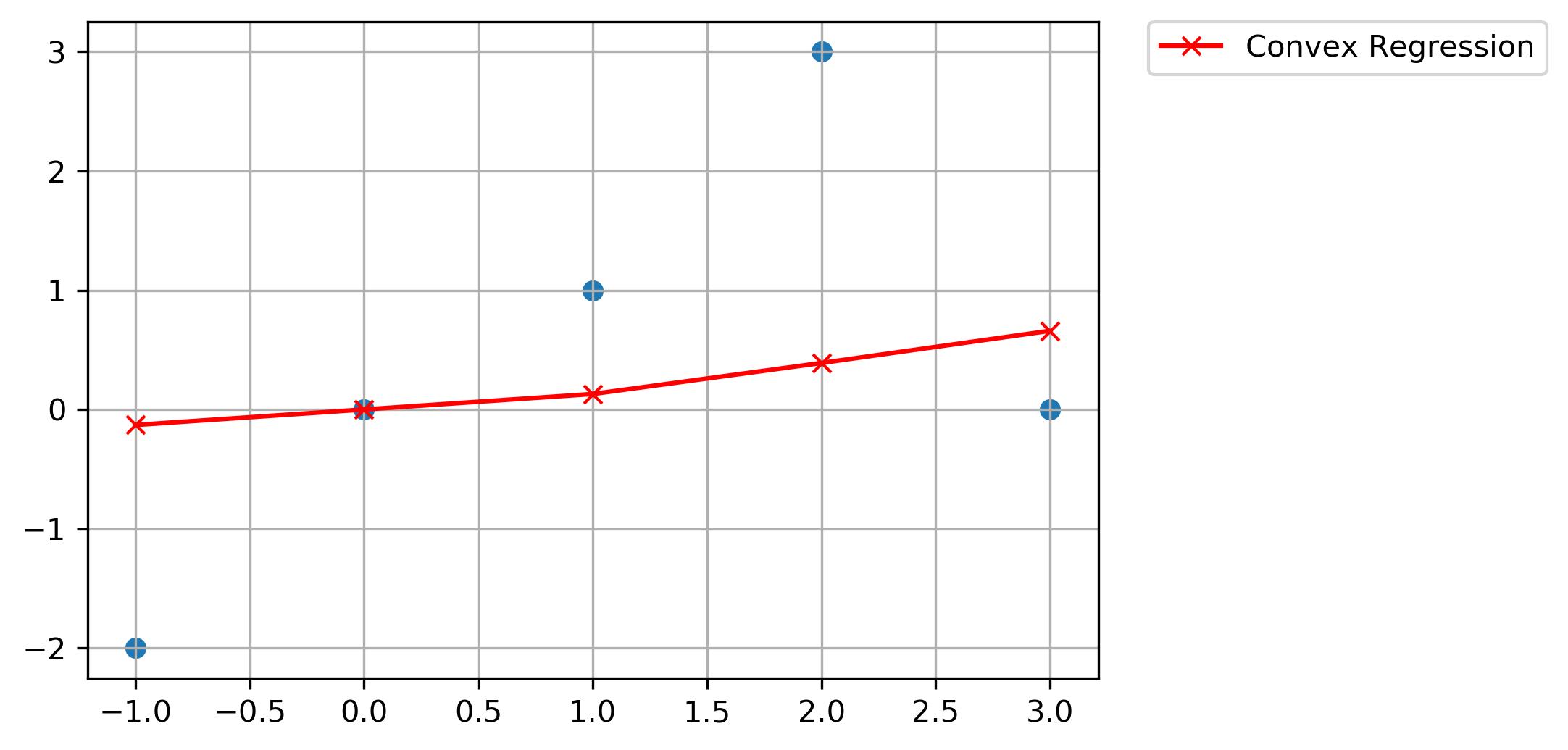

Convex Regression:

LP Formulation:

Change fractional constraints by clearing denominator:

# Create optimization variables

t = cp.Variable((len(points), 2), integer=False) # Absolute Deviations

y = cp.Variable((len(points),), integer=False) # y predictions

# Create constraints.

constraints = [

(x_j - x_k) * y_i + (x_k - x_i) * y_j + (x_i - x_j) * y_k <= 0

for y_i, y_j, y_k, x_i, x_j, x_k in zip(

y, y[1:], y[2:], points, points[1:], points[2:]

)

] + [t @ np.array([1, -1]) + y == points[:, 1], t >= 0]

# Form objective.

obj = cp.Minimize(np.ones((1, len(points))) @ (t @ np.array([[1], [1]])))

# Form and solve problem.

prob = cp.Problem(obj, constraints)

prob.solve()

print("Linear Programming Solution")

print("=" * 30)

print(f"Status: {prob.status}")

print(f"The optimal value is: {np.round(prob.value, 2)}")

print(f"Residuals: t = {np.max(np.round(t.value, 2), axis=1)}")

print(f"y = {np.round(y.value, 2)}")

Linear Programming Solution

==============================

Status: optimal

The optimal value is: 6.0

Residuals: t = [1.87 0. 0.87 2.61 0.66]

y = [-0.13 -0. 0.13 0.39 0.66]

plt.plot(

points[:, 0], np.round(y.value, 2), "-x", c="red", label=r"Convex Regression",

)

plt.scatter(*list(zip(*points)))

plt.grid(True)

plt.legend(bbox_to_anchor=(1.05, 1), loc=2, borderaxespad=0.0)

plt.show()